BM Cloud Kubernetes Services deploys Calico Opensource in all our managed Kubernetes and Openshift clusters for both policy and for pod networking.

Project Calico is an open-source project with an active development and user community. Calico Open Source was born out of this project and has grown to be the most widely adopted solution for container networking and security, powering 8M+ nodes daily across 166 countries.

BM Cloud Kubernetes Services deploys Calico Opensource in all our managed Kubernetes and Openshift clusters for both policy and for pod networking.

FD.io integrates Calico with the FD.io/VPP data plane to provide optimal performance for Containerized Networking Functions.

We have been using Calico in our Openstack clusters. By peering directly with the network infrastructure using BGP, our customers can benefit from ECMP load balancing, path redundancy, and in the future, advanced features like gslb/ IP anycast.

We are currently developing our Release-2 roadmap, and are focused on integrating Project Calico into our Managed Elastic Kubernetes Service (mEKS) product.

We recommend Calico as the default networking and security solution for customers using the Rafay Kubernetes Operations Platform. No one should run Kubernetes without Calico networking and security.

It’s extremely helpful to know what endpoints your services are talking to. We have a much-improved security posture.

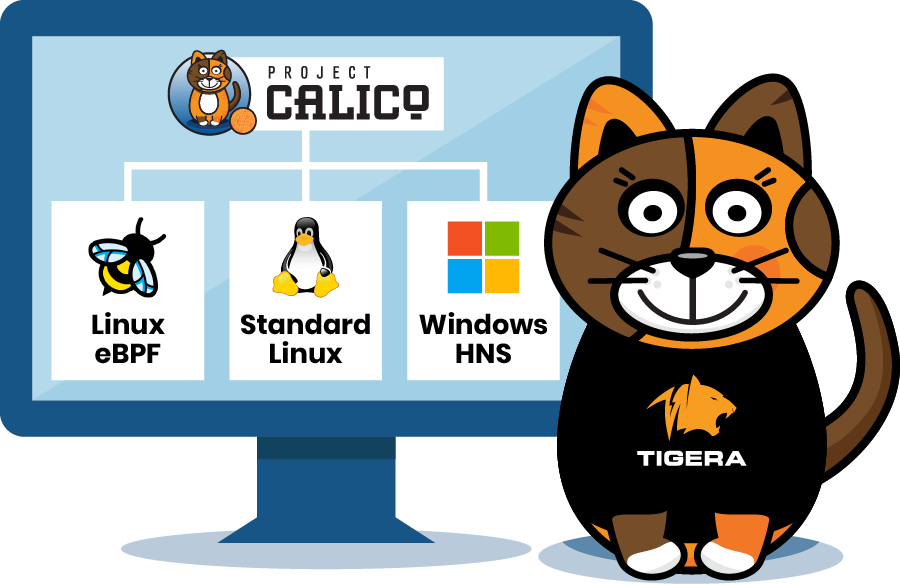

eBPF, standard Linux, and Windows data planes

Work with non-Kubernetes workloads

Built to go faster with lower CPU consumption, to help you get the best possible performance from your investments in clusters

Lock in step scalability with Kubernetes clusters without sacrificing performance

Rich network and security policy model for secure communication and WireGuard encryption

Work with the original reference implementation of Kubernetes network policy

Leverage the innovation provided by 200+ contributors from a broad range of companies

Here are some recommendations for getting involved with Project Calico. There are many paths though—the only hard rule on getting involved is that we all aim to be excellent to each other and you need to read and follow our Code of Conduct. You can and should expect to see others following it, too.

Sign up to become a member of our ambassador program, Calico Big Cats, and get a chance to share your experience with other users in the community.

Open-source networking and security for containers and Kubernetes, powering 8M+ nodes daily across 166 countries.

Learn MoreFully managed pay-as-you-go SaaS for active security for cloud-native applications running on containers, Kubernetes, and cloud. Also offered as an annual subscription.

Learn MoreSelf-managed, active Cloud-Native Application Protection Platform (CNAPP) with full-stack observability for containers, Kubernetes, and cloud. Hosted by the organization on-premises or in the public cloud.

Learn More