Editor’s note: This blog post is contributed by Giant Swarm, and is based on an earlier article published in the Kubernetes blog.

Giant Swarm’s container infrastructure started out with the goal of giving developers an easy way to deploy containerized microservices. We manage Kubernetes clusters for our customers, using an underlying infrastructure built on (what else?) Kubernetes!

We call this platform ‘Giantnetes’.

Giantnetes: What and How

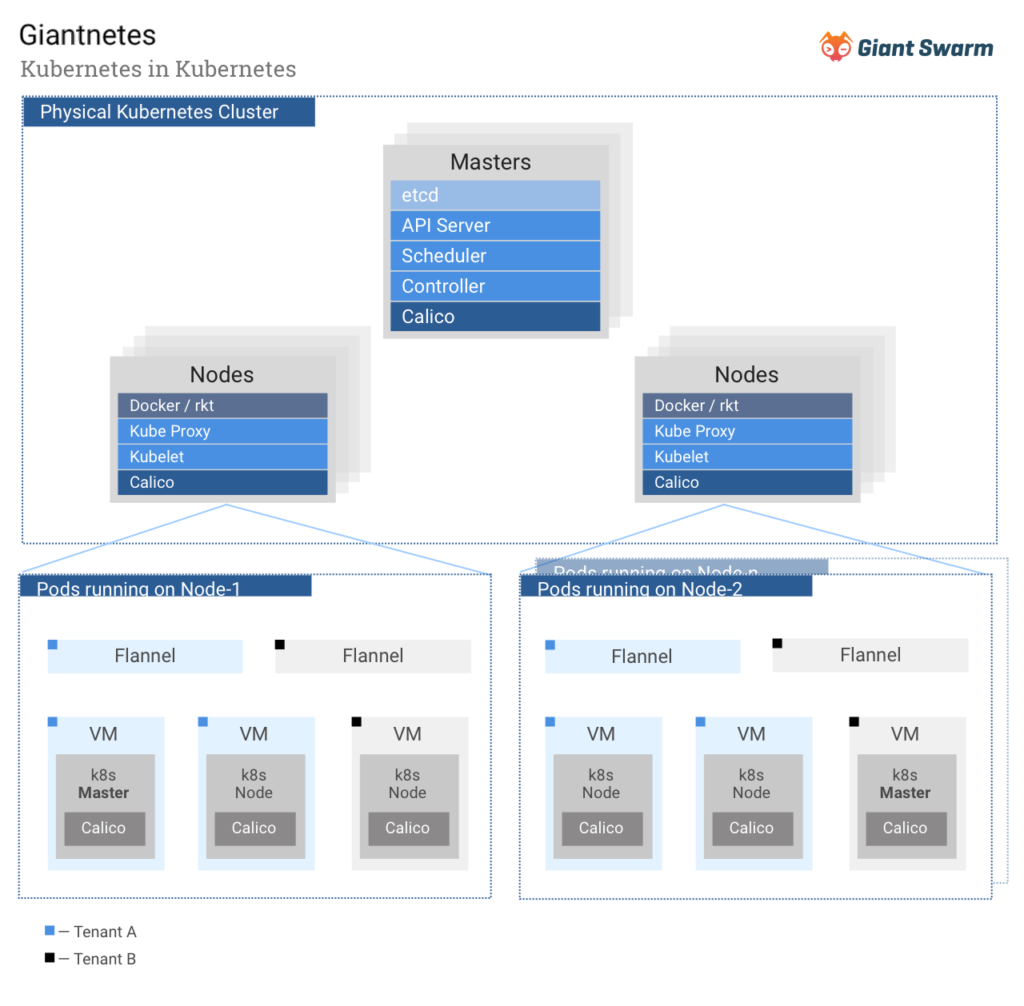

At its most basic, Giantnetes is an outer Kubernetes cluster that runs and manages multiple, completely isolated user Kubernetes clusters.

The Giantnetes components are self-hosted on top of CoreOS Container Linux, meaning that a kubelet is in charge of automatically bootstrapping the components. Once the Giantnetes cluster is running, we use it to deploy our operators and other tooling for managing and securing the tenant Kubernetes clusters.
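
The post does not say exactly how the kubelet bootstraps the components. One widely used mechanism is the kubelet's static Pod directory: the kubelet runs any Pod manifest placed in the directory configured by `staticPodPath` (or the older `--pod-manifest-path` flag), without needing an API server. The Python sketch below assumes that mechanism; the temporary directory, image name, and helper names are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def static_pod(name: str, image: str, command: list[str]) -> dict:
    """Build a minimal static Pod manifest for one control-plane component."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": "kube-system"},
        "spec": {
            # Control-plane components typically use the host network,
            # since the Pod network may not exist yet during bootstrap.
            "hostNetwork": True,
            "containers": [{"name": name, "image": image, "command": command}],
        },
    }


def write_manifest(pod: dict, manifest_dir: Path) -> Path:
    """Write the manifest where the kubelet watches (JSON is valid YAML)."""
    path = manifest_dir / f"{pod['metadata']['name']}.json"
    path.write_text(json.dumps(pod, indent=2))
    return path


if __name__ == "__main__":
    # Hypothetical stand-in for the kubelet's real staticPodPath.
    manifest_dir = Path(tempfile.mkdtemp())
    apiserver = static_pod(
        "kube-apiserver",
        "registry.example.com/kube-apiserver:hypothetical",  # placeholder image
        ["kube-apiserver", "--etcd-servers=https://127.0.0.1:2379"],
    )
    print(write_manifest(apiserver, manifest_dir))
```

Once the kubelet picks up such a manifest, the component keeps running under the kubelet's supervision, which is what lets the cluster bring itself up without an external orchestrator.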

Networking it all Together: Calico (and Flannel)

We chose Calico as the Giantnetes network plugin to ensure security, isolation, and the right performance for all the applications running on top of Giantnetes and inside the tenant Kubernetes clusters.

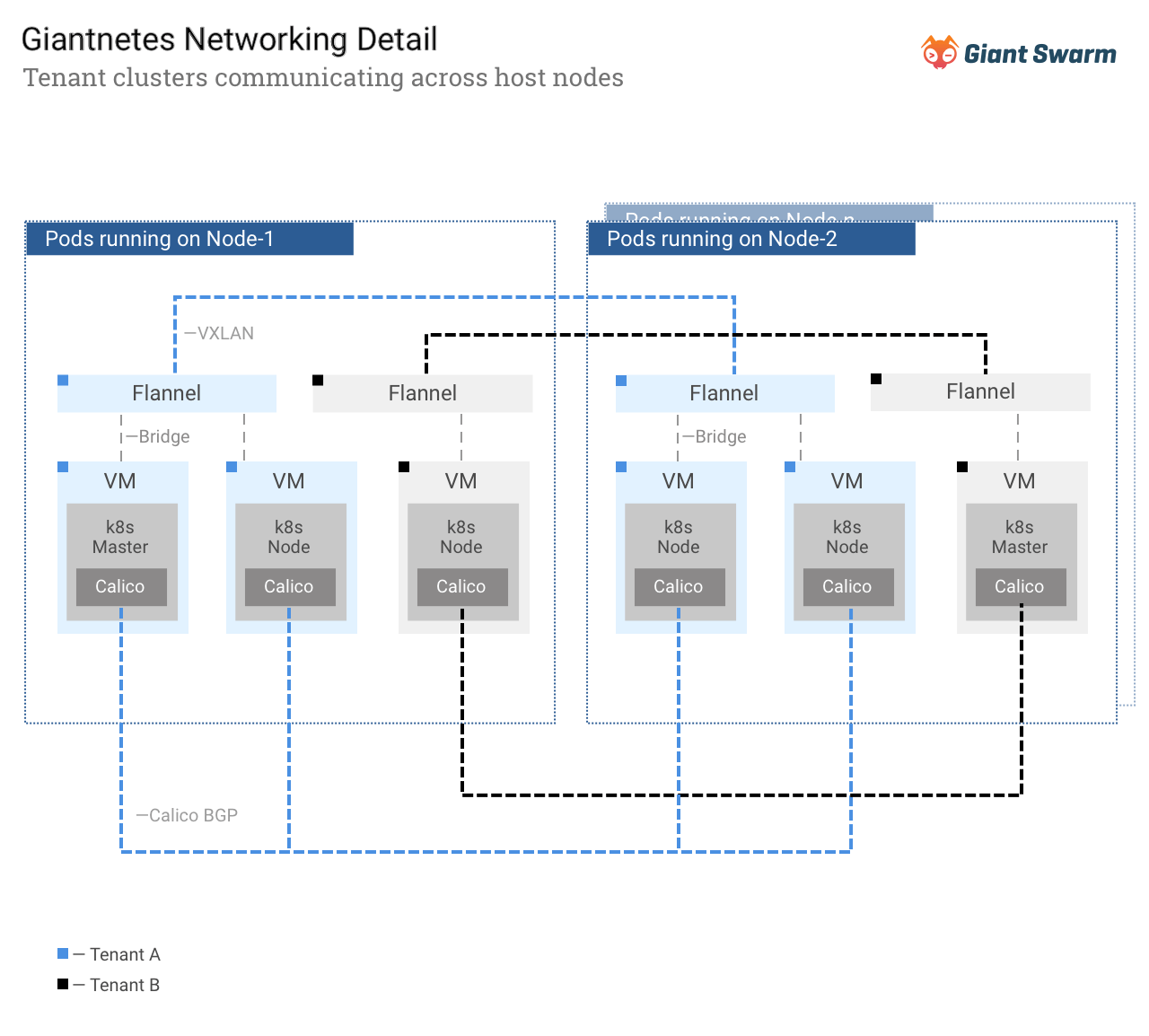

To create the inner Kubernetes clusters, the operators create certificates and tokens and launch virtual machines for the future cluster. On-premises, these VMs start inside a flannel VXLAN bridge; on AWS, they start in their own VPC.
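
Two things matter in this flow: credentials exist before any VM boots, and the network the VMs join depends on where the cluster runs. The following Python sketch models only that ordering; the `TenantCluster` type, the provider labels, and the step strings are hypothetical and are not Giant Swarm's operator code.

```python
from dataclasses import dataclass, field


@dataclass
class TenantCluster:
    cluster_id: str
    provider: str   # hypothetical labels: "onprem" or "aws"
    vm_count: int
    steps: list[str] = field(default_factory=list)


def network_for(cluster: TenantCluster) -> str:
    """Choose where the cluster's VMs are attached, per the provider."""
    if cluster.provider == "onprem":
        return "flannel VXLAN bridge"
    if cluster.provider == "aws":
        return f"dedicated VPC for {cluster.cluster_id}"
    raise ValueError(f"unknown provider: {cluster.provider}")


def provision(cluster: TenantCluster) -> list[str]:
    """Record, in order, the steps an operator takes for a new tenant cluster."""
    # Credentials come first so every VM can join the cluster as soon as it boots.
    cluster.steps.append(f"create certificates for {cluster.cluster_id}")
    cluster.steps.append(f"create tokens for {cluster.cluster_id}")
    # Then launch the VMs inside the provider-specific network.
    network = network_for(cluster)
    for i in range(cluster.vm_count):
        cluster.steps.append(f"launch vm-{i} in {network}")
    return cluster.steps


if __name__ == "__main__":
    for step in provision(TenantCluster("abc12", "onprem", vm_count=3)):
        print(step)
```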

As mentioned above, the networking solution for the inner Kubernetes clusters is Calico with BGP. As soon as the first Kubernetes components are running, we start a hosted Calico setup, with the Calico nodes running as a DaemonSet and the Calico Policy Controller as a Deployment. This setup creates a single network for the Kubernetes Pods. By using Calico, we mimic the Giantnetes networking solution inside each Kubernetes cluster and provide the primitives to secure and isolate workloads through the Kubernetes network policy API.
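
Because Calico enforces the Kubernetes network policy API, tenants can isolate workloads with standard `NetworkPolicy` objects. As an illustration (the namespace, labels, and port are hypothetical), this Python sketch builds a default-deny ingress policy plus one allow rule, in the `networking.k8s.io/v1` form used by current Kubernetes releases; the JSON it prints can be piped to `kubectl apply -f -`.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Select every Pod in the namespace and allow no inbound traffic."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},           # empty selector matches all Pods
            "policyTypes": ["Ingress"],  # no ingress rules, so nothing is allowed in
        },
    }


def allow_ingress(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """Let Pods labelled app=source_app (same namespace) reach app=target_app on one TCP port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    items = [default_deny_ingress("team-a"), allow_ingress("team-a", "api", "frontend", 8080)]
    # A v1 List lets kubectl apply both policies in one call.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Network policies are additive allow-lists: the deny-all policy isolates every Pod in the namespace, and each further policy opens exactly one path, which Calico then enforces on the node.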

Keeping it Secure: Encryption, Certificates, and Network Policy

Regarding security, we aim to separate privileges as much as possible and to make things auditable. Currently, this means we use certificates to secure access to the clusters and to encrypt communication between all the components that form a cluster (e.g. VM to VM, Kubernetes components to each other, Calico to etcd). For this, we create a PKI backend and root CA per cluster and then use Vault to issue certificates per service on demand. Every component uses a different certificate, so a compromised component or node does not expose the whole cluster. We also rotate the certificates on a regular basis. For customers wanting fine-grained network security, the Kubernetes network policy API is supported, since the networking is implemented by Calico under the covers.
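
Vault's PKI secrets engine issues a certificate through `POST /v1/<mount>/issue/<role>`. The Python sketch below assumes one PKI mount per cluster, at a hypothetical path `pki-<cluster_id>`, so each cluster has its own root CA; it only builds the request rather than sending it. The Vault address, role names, and the rule of rotating after two thirds of a certificate's lifetime are assumptions for illustration, not Giant Swarm's settings.

```python
import json
import urllib.request
from datetime import datetime, timedelta, timezone

VAULT_ADDR = "https://vault.example.com:8200"  # hypothetical Vault address


def issue_request(cluster_id: str, role: str, common_name: str,
                  ttl: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a PKI 'issue' call against a per-cluster mount."""
    # Hypothetical mount layout: one PKI engine, and thus one root CA, per cluster.
    url = f"{VAULT_ADDR}/v1/pki-{cluster_id}/issue/{role}"
    body = json.dumps({"common_name": common_name, "ttl": ttl}).encode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"X-Vault-Token": token, "Content-Type": "application/json"},
    )


def needs_rotation(not_before: datetime, not_after: datetime,
                   now: datetime, fraction: float = 2 / 3) -> bool:
    """Rotate once an assumed fraction of the certificate's lifetime has elapsed."""
    lifetime = not_after - not_before
    return now >= not_before + lifetime * fraction


if __name__ == "__main__":
    # One certificate per component: etcd gets its own role and common name.
    req = issue_request("abc12", "etcd", "etcd.abc12.internal", "720h", "hypothetical-token")
    print(req.get_method(), req.full_url)
    start = datetime.now(timezone.utc)
    print(needs_rotation(start, start + timedelta(days=30), start + timedelta(days=25)))
```

Issuing per component from a per-cluster CA keeps the blast radius small: revoking or rotating one component's certificate touches nothing else, and no certificate from one cluster is trusted by another.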

On-Prem Load Balancing with Ingress, simplified by Calico

To provide access from the outside to the API and services of each inner Kubernetes cluster, we run an NGINX Ingress controller setup in Giantnetes that connects the Kubernetes VMs to hardware load balancers. Because Calico networks are “flat IP” without an overlay, this setup works seamlessly, without any need to translate between the virtual and “real” network domains.
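
As a hypothetical example of routing in this setup, the Python sketch below builds an `Ingress` object (`networking.k8s.io/v1`) that sends traffic for one tenant's API host to a Service fronting that tenant's API server. The host naming scheme, namespace-per-tenant layout, Service name, and `nginx` ingress class are assumptions. The `ssl-passthrough` annotation is a real ingress-nginx option (it requires the controller to run with `--enable-ssl-passthrough`); it is used here so that TLS, and with it certificate-based client authentication, terminates at the API server rather than at NGINX.

```python
import json


def tenant_api_ingress(cluster_id: str, base_domain: str) -> dict:
    """Route one tenant cluster's API hostname to its (hypothetical) API Service."""
    host = f"api.{cluster_id}.{base_domain}"  # hypothetical naming scheme
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": f"{cluster_id}-api",
            "namespace": cluster_id,  # assumption: one namespace per tenant cluster
            "annotations": {
                # ingress-nginx: forward TLS untouched, routing by SNI hostname.
                "nginx.ingress.kubernetes.io/ssl-passthrough": "true",
            },
        },
        "spec": {
            "ingressClassName": "nginx",
            "rules": [{
                "host": host,
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {
                        "name": f"{cluster_id}-api",
                        "port": {"number": 443},
                    }},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(tenant_api_ingress("abc12", "example.com"), indent=2))
```

Since Calico gives Pods routable addresses without an overlay, the controller's upstreams are ordinary IPs on the same network the hardware load balancers already reach.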

Conclusion

To sum up, Calico has proven to be the right solution for securely networking our multi-tenant Kubernetes configuration.

This setup is still in its early days, and our roadmap includes improvements in many areas, such as transparent upgrades, dynamic reconfiguration and scaling of clusters, performance, and (even more) security. We see Calico as a key element in this architecture, supporting these ongoing developments.

— Timo Derstappen, CTO & Puja Abbassi, Developer Advocate, Giant Swarm
